Here is an article about image measurements: how an image is measured depending on the circumstances (a monitor image, a printed image or a scanned image), and how the different measurements have led to confusion between the concepts of dot and pixel, both basic units but for totally different purposes.

Image Measures

As mentioned in the previous post, here are four relevant indicators of image measurement and resolution: PPI, DPI, LPI and SPI.


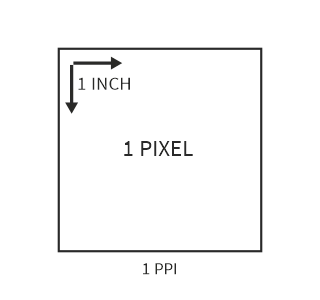

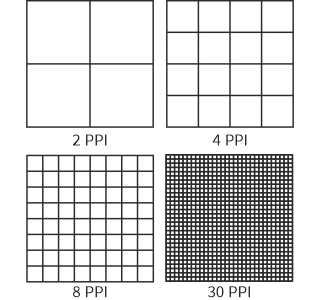

► PPI – Pixels Per Inch

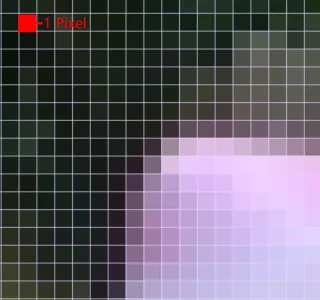

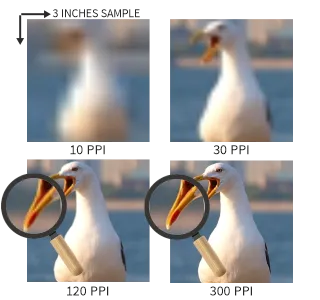

PPI stands for Pixels Per Inch. The term refers to the actual resolution of the image as a virtual image, that is, as displayed on a monitor. Whenever we work on an image on the computer, in any image editing program, we are working in PPI.

PPI is also involved in calculating the print size from the number of pixels in the image, since printed images are not measured in pixels but in metric units or inches. The print size is obtained by dividing each pixel dimension (width and height) by the resolution (PPI). As explained in the next section, this is where PPI and DPI get mixed up, and the mix-up muddles the calculation itself, because even well-known brands in the graphics and photography world use DPI in this calculation, once again mixing dot with pixel, or physical with virtual.
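The division described above can be sketched in a few lines of Python. This is a minimal sketch: the function names `print_size` and `inches_to_cm` are illustrative and not taken from any particular program, and the 3000 × 2400 pixel image is a made-up example.

```python
def print_size(width_px: int, height_px: int, ppi: float) -> tuple[float, float]:
    """Return the print size in inches: each pixel dimension divided by PPI."""
    if ppi <= 0:
        raise ValueError("ppi must be positive")
    return width_px / ppi, height_px / ppi


def inches_to_cm(inches: float) -> float:
    # One inch is defined as exactly 2.54 cm.
    return inches * 2.54


# Hypothetical 3000 x 2400 pixel image printed at 300 PPI.
w_in, h_in = print_size(3000, 2400, 300)
print(f"{w_in:.2f} x {h_in:.2f} in")  # 10.00 x 8.00 in
print(f"{inches_to_cm(w_in):.1f} x {inches_to_cm(h_in):.1f} cm")  # 25.4 x 20.3 cm
```

Note that nothing here involves the printer: the inputs are pixels and pixels per inch, which is why calling this value DPI mixes up the virtual and the physical image.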

► DPI – Dots Per Inch

DPI stands for Dots Per Inch. Here we are already looking at the unit of measure for converting the virtual image into a physical one, hence the change from pixel to dot: printers use dots to reproduce each image pixel. However, many software manufacturers use DPI in place of PPI, and if you search for these acronyms you will find a real battle of theories, definitions and justifications for this confusion. Keep in mind that the concept of a pixel is much more complex and interesting than the generalized descriptions suggest.

A pixel is not a simple linear unit corresponding to the tiny square you see when the image is magnified to the maximum. The familiar little square on the display is nothing more than a model adopted by graphics card makers to simplify the visual representation of the pixel, which in reality has no defined shape but had to be represented somehow. The true notion of the pixel may be one of the reasons for the confusion between PPI and DPI, since a pixel corresponds to a sample of a certain point of the image, and in a color image a pixel may even contain three samples, one for each primary color (read Image And Colors).

What generally happens, though, is that DPI is taken as a measure for output devices, and in this sense almost every device qualifies (not only printers, but also video projectors, cameras, etc.). This is likely the main reason manufacturers ignore PPI and adopt DPI as a general unit of resolution, without distinguishing the physical image from the virtual one.

However, this broad notion of output device gets confused with a narrower notion of output that is also used to distinguish PPI from DPI: PPI is considered an input measure and DPI an output measure. In this sense the only output devices are printers and imagesetters, because they are the only devices from which an image cannot return to the monitor in the same form. By this logic, all other devices are considered input pathways, even if some work both ways (input and output), as is the case with digital cameras, for example.


Moreover, we must take into account that printing reproduces an image very differently from the way a monitor displays it, so the distinction between PPI and DPI makes sense, not least because the resolution of the virtual image does not always match the resolution of the printer. For example, I can print an image with a resolution of 1200 PPI on a printer whose maximum resolution is 600 DPI, because 1200 PPI refers to the definition of the image and 600 DPI refers to the quality of the print: two different aspects. If everything is called DPI, this distinction is lost. DPI was actually created exclusively for printing, as a hardware reference, so replacing PPI with DPI is a simplistic choice, to say the least, and one that many schools and professionals consider crude and wrong.


Nowadays, the wide variety of printer models makes everything even more confusing. Technical evolution has multiplied options and nomenclature in pursuit of better results and ease of use. In both home printers and much professional printing equipment, instead of DPI values there are options such as Fine, Coarse, Photo, High, Low, Document, Image, etc.

However, most of these options do not refer to the number of dots per inch, but to how color and contrast are interpreted and to the type of dot applied to improve the final printed image. Most printers have only one to three real DPI settings (usually 300, 600 and 1200 in laser printers, and higher values in inkjet printers, from 720 to 5000 and beyond, but each printer offers only two or three settings, and often only one), which makes the whole distinction even harder.

Printers today also offer several print size options for the same image: the user simply chooses the desired size and the machine does all the calculations accordingly. At no point, however, does DPI change the number of pixels in the image. DPI only changes the number of dots the printer applies to represent a single pixel on the substrate. When the image is sent to the printer, each pixel is resized, larger or smaller, according to the output size defined by the user.
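The relationship described above can be illustrated with a small Python sketch. It assumes a simplified linear model in which the printer lays its dots evenly over each pixel; real printers combine dots through their own dithering and screening, so `dots_per_pixel` is a rough indicator, not a figure any printer reports. Both function names are hypothetical.

```python
def effective_ppi(pixels: int, output_inches: float) -> float:
    """Pixels laid over each inch of paper once the user picks an output size."""
    return pixels / output_inches


def dots_per_pixel(printer_dpi: float, ppi: float) -> float:
    """Printer dots available along one side of each image pixel (linear ratio)."""
    return printer_dpi / ppi


pixels_wide = 3000  # the pixel count stays the same whatever size is chosen
for width_in in (5, 10, 20):
    ppi = effective_ppi(pixels_wide, width_in)
    print(f"{width_in} in wide: {ppi:.0f} PPI, "
          f"{dots_per_pixel(1200, ppi):.1f} dots per pixel at 1200 DPI")
```

Choosing a larger output size lowers the effective PPI (each pixel gets bigger on paper) while the pixel count stays at 3000. A ratio below 1, as with the 1200 PPI image on a 600 DPI printer mentioned earlier, means the printer cannot give each pixel even one dot of its own.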


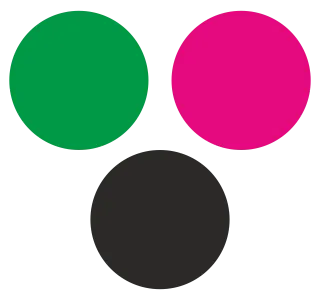

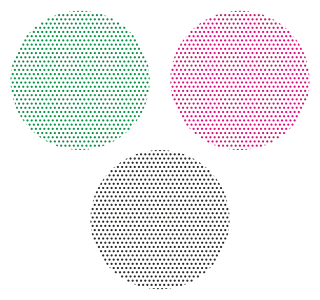

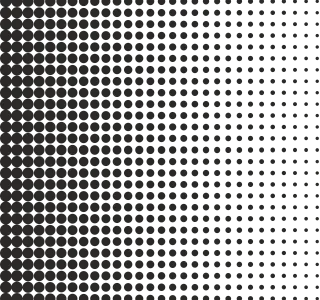

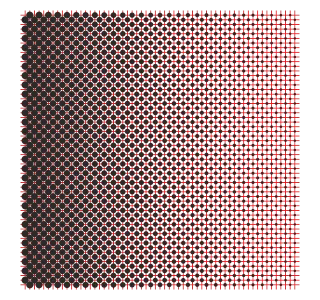

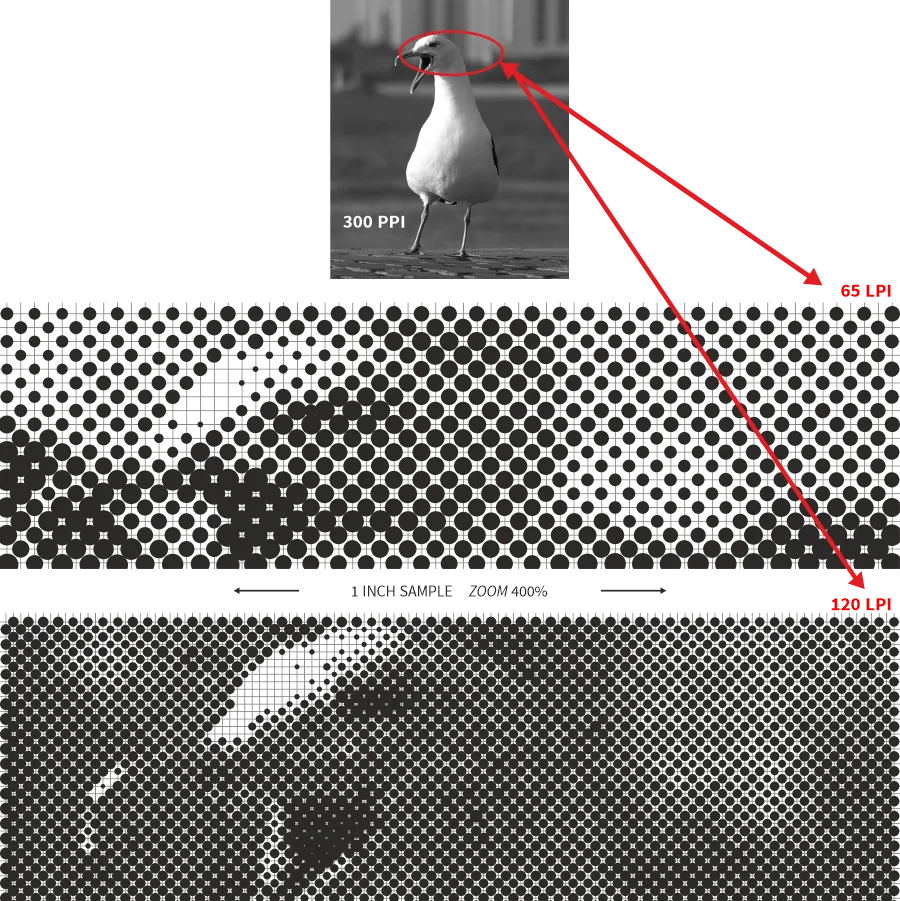

► LPI – Lines Per Inch

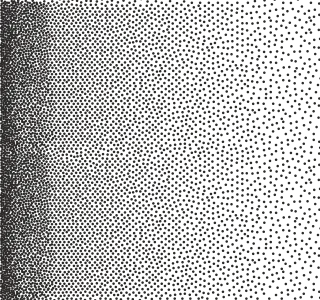

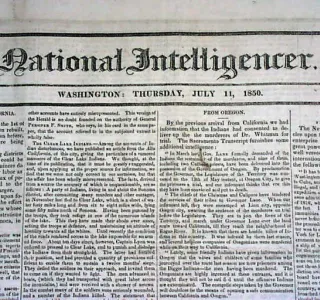

LPI stands for Lines Per Inch. This is another printing measure, used mostly in commercial and industrial printing processes based on halftone, typically screen printing, laser printing and offset printing. Halftone is a reproduction technique that simulates continuous-tone images using dots that vary in size or spacing, producing an effect similar to a gradient, or to light and shadow, from a single ink tone. The photomechanical halftone process emerged in the 19th century to reproduce photographs in the newspapers of the time. Before that, typographic reproduction was limited to solid black and white areas on the paper: outlined, opaque and generally rather crude-looking characters and illustrations (100% opaque, dotless patches of print).

The halftone technique requires equipment capable of reproducing an amplitude-modulated (AM) screen. Today, in the specific case of inkjet printers, even in professional or commercial environments, this classic halftoning does not apply, because these printers tend to print with frequency-modulated (FM) dots rather than amplitude-modulated ones.
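To make the amplitude-modulated idea concrete, here is a toy Python sketch of a single halftone cell, assuming a square cell of 8 × 8 printer dots. The spacing between cells is fixed; only the size of the central ink dot grows with the tone. The helper `am_halftone_cell` is hypothetical, and real screening details such as screen angles per ink and dot shapes are omitted.

```python
def am_halftone_cell(darkness: float, size: int = 8) -> list[str]:
    """Render one amplitude-modulated halftone cell as text.

    darkness: 0.0 (white paper) to 1.0 (solid ink).
    The cell keeps a fixed size (fixed spacing between cells); only the
    size of the central ink dot grows with darkness.
    """
    if not 0.0 <= darkness <= 1.0:
        raise ValueError("darkness must be between 0 and 1")
    centre = (size - 1) / 2
    # Order the printer dots by distance from the cell centre, so ink
    # accumulates as one roughly round, growing dot (a "clustered" dot).
    order = sorted(
        ((r, c) for r in range(size) for c in range(size)),
        key=lambda rc: (rc[0] - centre) ** 2 + (rc[1] - centre) ** 2,
    )
    inked = set(order[: round(darkness * size * size)])
    return ["".join("#" if (r, c) in inked else "." for c in range(size))
            for r in range(size)]


for tone in (0.1, 0.5, 0.9):
    print(f"darkness {tone}:")
    print("\n".join(am_halftone_cell(tone)))
```

An FM screen would instead keep every dot the same size and scatter more or fewer of them across the area, which is the approach the paragraph above attributes to inkjet printers.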

In laser printers, the linescreen generally ranges from 50 to 110 LPI, depending on the printer and the type of paper. Keep in mind that the LPI setting is intended for high-volume printing techniques and specific substrates, so most laser printers, especially those for office and home use and even a good part of the professional range, do not let you choose the linescreen, only the resolution. In that case, linescreens can be simulated in image editing programs.

LPI is the number of lines per inch, each line being made up of dots: the more lines, the smaller the dots and the greater the definition. LPI is not strictly a measure of resolution, since resolution implies a constant dot or pixel size across the same screen or image (DPI and PPI), whereas with LPI the dot size varies within the same screen. So when we talk about LPI, we are talking about screen frequency, not resolution.
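One way to see how screen frequency and printer resolution interact is the commonly quoted halftone rule: a cell of (DPI / LPI) × (DPI / LPI) printer dots can show that many tones plus paper white. The Python sketch below applies this rule of thumb; the function name is hypothetical, and integer division is a simplification for values that do not divide evenly.

```python
def halftone_gray_levels(device_dpi: int, lpi: int) -> int:
    """Tones one halftone cell can show: (DPI / LPI)^2 dots, plus paper white."""
    cell = device_dpi // lpi  # printer dots along one side of a halftone cell
    return cell * cell + 1


for dpi, lpi in ((600, 100), (1200, 150), (2400, 150)):
    print(f"{dpi} DPI at {lpi} LPI: {halftone_gray_levels(dpi, lpi)} tones")
```

At a fixed DPI, raising the LPI makes the cells, and therefore the dots, smaller and the image finer, but each cell then holds fewer printer dots and can render fewer tones. This is one reason why LPI describes a screen rather than a resolution.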

The linescreen also depends on the printing process and on the characteristics of the substrate. Printing companies currently use linescreens from 55 to 85 LPI for newspapers, from 100 to 120 LPI for magazines, and from 120 to 200 LPI for higher-quality laser and offset prints.

In screen printing, the recommended linescreen is between 45 and 65 LPI. Screens with a mesh count between 90 and 185 threads per centimeter are intended for halftone printing. This is because the halftone technique was first applied through a gauze screen by its inventor, William Talbot, when he realized that the image had to be broken into a fine grid so that ink could be held across the larger areas of the engraved image.


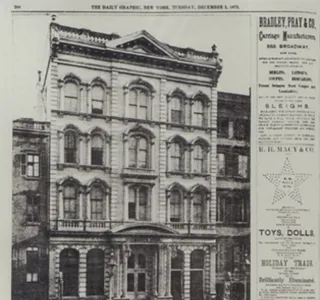

Monitor versus Print at 10 PPI

Poor image quality when printed, because the image pixels are resized (not increased in number) to fit the user-defined output dimensions.

Monitor versus Print at 300 PPI

An example where the monitor image matches the printed image quality. This is why 300 PPI has been adopted internationally as the high-resolution standard for printing: not only because the print size matches the monitor size (so there is no pixel magnification and the image stays sharp), but also because it is a resolution that covers all types of printing up to 200 LPI.
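The link between 300 PPI and 200 LPI can be checked against a commonly quoted rule of thumb that sets image resolution at 1.5 to 2 times the linescreen (a "quality factor"). The Python sketch below assumes that rule and uses the linescreens listed earlier; `recommended_ppi` is a hypothetical helper, not a published standard.

```python
def recommended_ppi(lpi: float, quality_factor: float = 1.5) -> float:
    """Rule-of-thumb image resolution for a given screen frequency (LPI)."""
    return lpi * quality_factor


for use, lpi in (("newspaper", 85), ("magazine", 120), ("high quality", 200)):
    low, high = recommended_ppi(lpi), recommended_ppi(lpi, 2.0)
    print(f"{use} at {lpi} LPI: {low:.0f} to {high:.0f} PPI")
```

With the lower factor of 1.5, 200 LPI gives exactly 300 PPI, which matches the claim that 300 PPI covers printing up to 200 LPI; with the stricter factor of 2 it would call for 400 PPI.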

► SPI – Samples Per Inch

There is also another resolution reference called Samples Per Inch (SPI). This measure is linked exclusively to scanners, because a scanner is a specific input device that captures images differently from a still camera. SPI corresponds to the optical resolution of the scanner and is related to the color depth of the scanned object, which is expressed in bits (read Image And Color).

Scan quality is defined by the number of color samples the scanner's optics can capture per linear inch. This capacity varies from scanner to scanner according to type and gamut, so many low-end scanners claim to capture millions of colors per inch when in fact they lack the real optical capacity; they only have a mode that simulates millions of colors, which obviously does not faithfully reproduce the real colors of the scanned object (much like the difference between optical and digital zoom: anything simulated lacks the quality of the real thing).

Once again, the SPI reference is often wrongly replaced by DPI in software interfaces, just as happens with DPI and PPI, which makes even less sense here, since scanners are neither output nor mixed (input/output) devices: they are typical input devices.
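Since SPI is the number of samples the scanner captures per inch of the original, it directly sets how many pixels the scanned image will have. The Python sketch below shows that arithmetic, assuming the scanner's true optical resolution is used rather than an interpolated (simulated) one; the function names and the 6 × 4 inch photo are hypothetical examples.

```python
def scan_pixels(original_inches: float, spi: int) -> int:
    """Pixels captured along one side: optical samples per inch x inches."""
    return round(original_inches * spi)


def spi_for_print(target_ppi: float, scale: float) -> float:
    """Scan resolution needed to print at `scale` times the original size."""
    return target_ppi * scale


# A 6 x 4 inch photo scanned at 600 SPI:
print(scan_pixels(6, 600), "x", scan_pixels(4, 600), "pixels")  # 3600 x 2400 pixels
# To print it twice as large at 300 PPI, scan at:
print(spi_for_print(300, 2), "SPI")  # 600 SPI
```

Once scanned, the samples become pixels, and from that point on the image is measured in PPI like any other virtual image.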


Find references and more knowledge in Learning Links
